Happy Monday! Here’s what’s inside this week’s newsletter:

Deep dive: NVIDIA’s three-computer system for Physical AI, showing how dedicated training, simulation, and runtime platforms combine hardware, system software, and models to build and deploy real-world robotic intelligence.

Spotlights: Firgun Ventures launches a fund targeting $250M for early growth-stage quantum companies, and a deep dive on the energy economics of inference.

Headlines: Semiconductor updates from SK hynix, TSMC, and imec, quantum networking and national initiatives, progress in photonic and neuromorphic hardware, and major AI data center and cloud projects.

Readings: Open chiplet and lifecycle approaches in semiconductors, quantum insights and investment outlooks, nonlinear photonic architectures, and neuromorphic systems for sensing and time synchronization.

Funding news: A quieter week concentrated in early-stage activity, with most financings in photonics and additional rounds in semiconductors and quantum, reflecting a pause in larger late-stage deals.

Bonus: Google’s accelerated TPU commercialization shows how diversified buyers and large-scale deployments could meaningfully challenge NVIDIA’s dominance in AI compute.

Deep Dive: The Compute Stack Behind Physical AI – Inside NVIDIA’s System Architecture

Why Physical AI Is a Computing Challenge

Physical AI is not only a robotics story. It is a computing story at its core. As we outlined in our explainer on the emerging challenges of building hardware for Physical AI, bringing intelligence into real-world environments is redefining compute design, power efficiency, sensor integration, and verification workflows. Physical AI systems need hardware that can understand 3D environments, process multimodal data in real time, and operate reliably under strict latency and safety constraints.

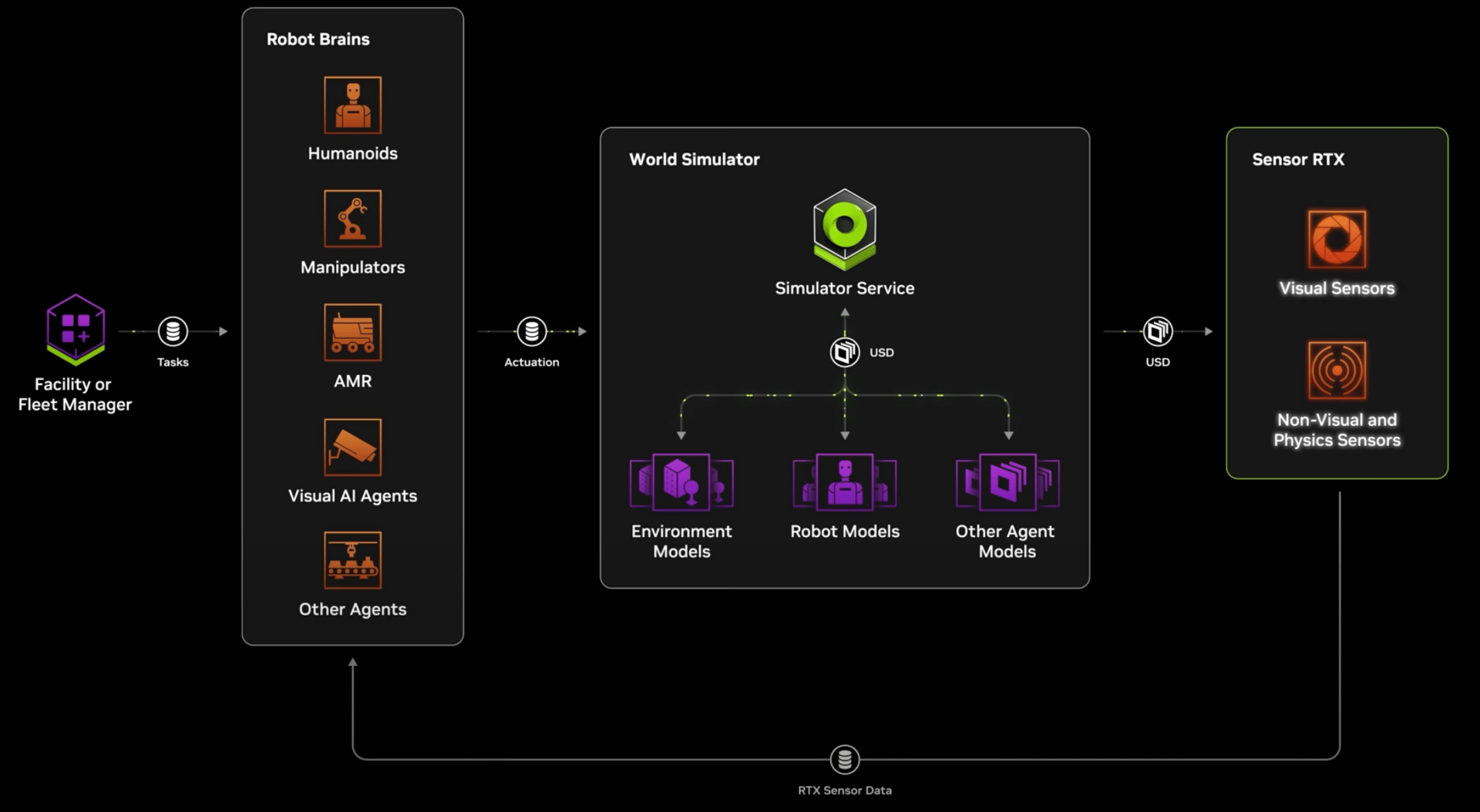

To support this shift, NVIDIA organizes the development pipeline for Physical AI around three distinct computers (each combining hardware, system software, and models):

1) one for training,

2) one for simulation and synthetic data generation,

3) and one for on-robot inference.

Together, these systems form a full stack for building, testing, and deploying robotic intelligence.

NVIDIA’s Three Computers for Physical AI

Each tier serves a specific purpose, provides dedicated hardware or software, and passes a defined output to the next stage of the pipeline.

Tier 1: Training Computer

Purpose: Enable large-scale training of robot foundation models for multimodal perception, language reasoning, and policy learning. Models must learn to understand objects, environments, and actions across diverse data modalities.

Architecture:

Hardware

- NVIDIA DGX systems provide the compute backbone for model training

Software and Models

- NVIDIA Cosmos open world foundation models

- NVIDIA Isaac GR00T humanoid robot foundation models

Developers can pre-train models from scratch on DGX or fine-tune Cosmos and GR00T for robot-specific tasks.

Output: A pre-trained or post-trained robotic foundation model ready for validation, testing, and refinement through simulation.

Tier 2: Simulation and Synthetic Data Computer

Purpose: Enable scalable simulation and synthetic data generation to support policy learning and robust validation of robot behavior when real-world data is limited, costly, or unsafe to collect.

Architecture:

Hardware

- NVIDIA RTX PRO Servers powering Omniverse-based simulation workloads

Software and Models

- NVIDIA Omniverse for physically based simulation

- NVIDIA Isaac Sim for robotics simulation

- NVIDIA Isaac Lab for reinforcement and imitation learning

- NVIDIA Cosmos for synthetic perception data

These systems allow developers to generate training data and validate robot policies across diverse environments and edge cases before real-world deployment.

Output: A validated robot policy tested across simulated scenarios and ready for real-time deployment on physical hardware.

Tier 3: Runtime Computer

Purpose: Enable real-time onboard inference for perception, reasoning, motion planning, and control. Robots must process sensor data, understand their environment, and act within milliseconds while operating under tight power and size constraints.

Architecture:

Hardware

- NVIDIA Jetson AGX Thor as the onboard AI compute system for real-time processing

Software and Models

- Runtime models for perception, control, and language

- Multimodal inference frameworks for robot decision-making

The system processes onboard sensor inputs, reasons about the physical environment, plans next actions, and executes motion on the robot.

Output: Real-time robotic behavior executed on physical hardware, with perception, planning, and control running directly on the onboard compute system.
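To make the hand-offs between the three tiers concrete, here is a minimal sketch that models each tier as a function whose return type is the "Output" described above. All type and function names (`FoundationModel`, `training_computer`, `simulation_computer`, and so on) are hypothetical and are not NVIDIA APIs; the bodies are placeholders standing in for DGX training, Omniverse/Isaac simulation, and onboard Jetson inference.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical types and functions for illustration only: none of these are NVIDIA APIs.


@dataclass
class FoundationModel:
    """Tier 1 output: a pre-trained or post-trained robot foundation model."""
    name: str
    fine_tuned_for: Optional[str] = None  # None means pre-trained only, no task fine-tuning


@dataclass
class ValidatedPolicy:
    """Tier 2 output: a policy that passed every simulated scenario it was tested on."""
    model: FoundationModel
    scenarios_passed: List[str] = field(default_factory=list)


@dataclass
class RuntimeStep:
    """Tier 3 output: one perceive -> plan -> act cycle executed on the robot."""
    observation: str
    action: str


def training_computer(base: str, task: Optional[str] = None) -> FoundationModel:
    # Placeholder for training: pre-train (task=None) or fine-tune for a robot task.
    return FoundationModel(name=base, fine_tuned_for=task)


def simulation_computer(
    model: FoundationModel,
    scenarios: List[str],
    passes: Callable[[FoundationModel, str], bool],
) -> ValidatedPolicy:
    # Placeholder for simulation: only hand off a policy that passes every scenario.
    failed = [s for s in scenarios if not passes(model, s)]
    if failed:
        raise ValueError(f"policy failed simulated scenarios: {failed}")
    return ValidatedPolicy(model=model, scenarios_passed=list(scenarios))


def runtime_computer(policy: ValidatedPolicy, observation: str) -> RuntimeStep:
    # Placeholder for onboard inference: map a sensor observation to an action.
    skill = policy.model.fine_tuned_for or "default"
    return RuntimeStep(observation=observation, action=f"{skill}:respond_to({observation})")


# Usage: each tier consumes the previous tier's output.
model = training_computer("robot-foundation-model", task="pick_and_place")
policy = simulation_computer(model, ["cluttered_table", "low_light"], passes=lambda m, s: True)
step = runtime_computer(policy, "cup_detected")
print(step.action)  # pick_and_place:respond_to(cup_detected)
```

The typed hand-offs encode the ordering described in the deep dive: the runtime tier only ever receives a `ValidatedPolicy`, so a model cannot reach the robot without first passing through simulated validation.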

You can find more NVIDIA publications on Physical AI here.

Source: What Is NVIDIA’s Three-Computer Solution for Robotics? (NVIDIA)

Spotlights

⚛️ Firgun Ventures Launches $250 Million VC Fund to Invest in Quantum (The Quantum Insider)

“Firgun Ventures announced a $70 million first close to launch what it calls the market’s first VC fund focused on early growth-stage quantum technology companies, backed by the Qatar Investment Authority. The fund, targeting $250 million, will invest globally in quantum computing, sensing, communications, and related applications in sectors such as healthcare, climate science, finance, and cybersecurity.

Founded by Kris Naudts and Zeynep Koruturk, the firm combines scientific, entrepreneurial, and financial expertise supported by an advisory council from leading institutions including Cambridge, Oxford, MIT, Google, the European Investment Bank, and the Wellcome Trust.”

If you want to learn what Zeynep and Kris look for in quantum startups and how they think about exit pathways, check out the interview on the Future of Computing blog!

🦾 The Energy Economics of Inference (Contrarian Ventures)

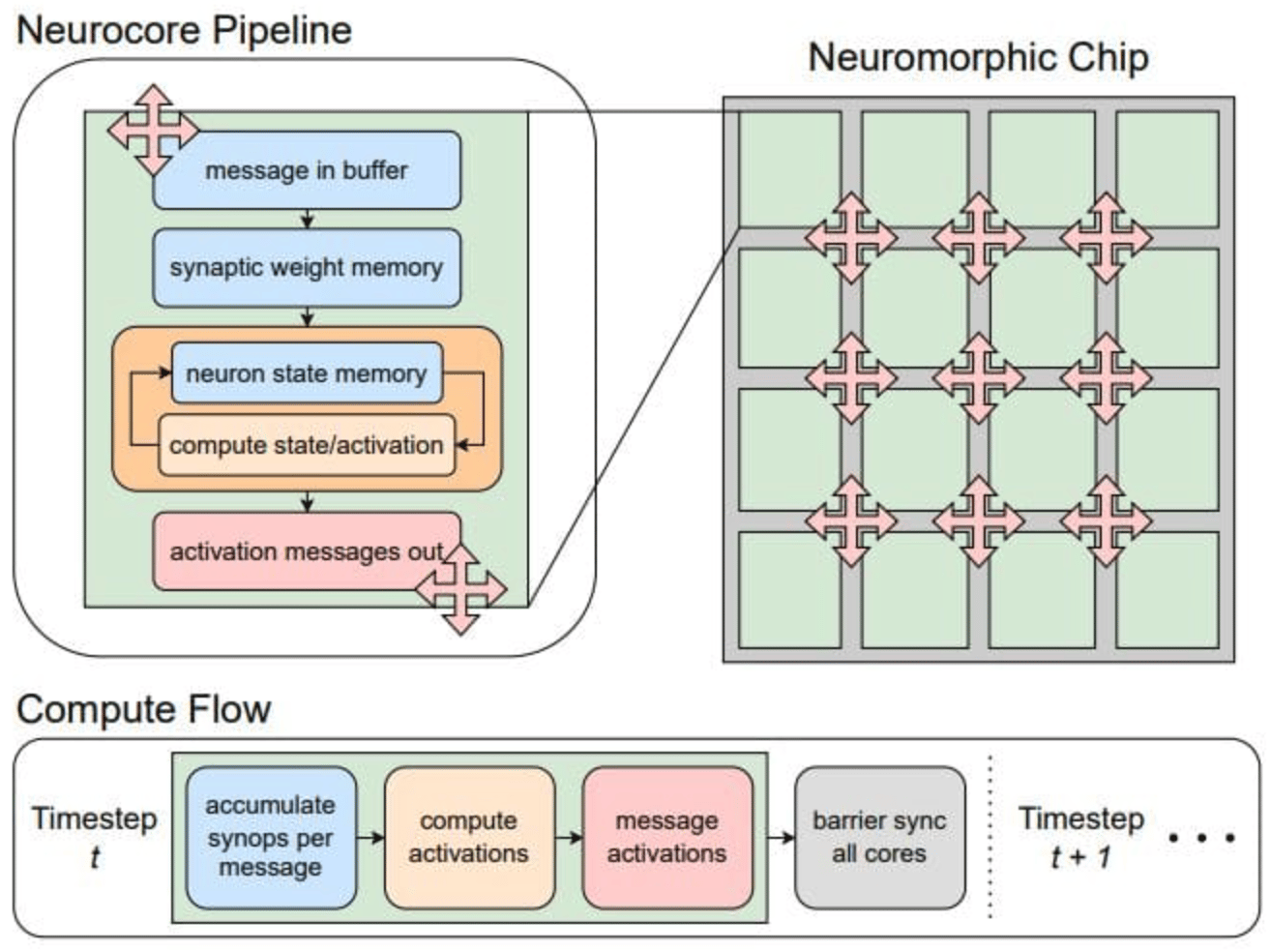

This deep dive explains that the real constraint in modern AI is increasingly energy per token rather than compute. As inference becomes sparse, memory-heavy and agentic, most energy is spent on memory access, data movement and idle time instead of FLOPs. This exposes a structural mismatch with GPUs, which were built for dense, regular workloads. The analysis points toward architectures designed around bandwidth and locality, including memory-centric chips, optical interconnects and neuromorphic systems that better match the event-driven nature of modern inference.
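To make "energy per token" concrete, here is a back-of-the-envelope sketch that splits the energy of generating one token into arithmetic and memory traffic. This is our own simplified model, not the one used in the Contrarian Ventures piece: it assumes dense decoding in which every weight is read once per forward pass and shared across `batch` tokens, and it ignores KV-cache reads, activations, on-chip caching, and the idle time the article also highlights. All constants are illustrative placeholders, not measurements of any real chip.

```python
from dataclasses import dataclass

# Back-of-the-envelope model; every constant below is an illustrative placeholder, not a measurement.


@dataclass
class DecodeAssumptions:
    params: float = 70e9          # dense model parameters (hypothetical model size)
    bytes_per_param: float = 1.0  # 8-bit weights
    pj_per_flop: float = 0.5      # hypothetical arithmetic energy, picojoules per FLOP
    pj_per_byte: float = 10.0     # hypothetical off-chip memory energy, picojoules per byte
    batch: int = 1                # tokens that share one read of the weights


def energy_per_token(a: DecodeAssumptions) -> dict:
    """Split the energy (joules) of generating one token into compute and memory traffic."""
    flops = 2 * a.params  # roughly one multiply-add (2 FLOPs) per weight per token
    bytes_moved = a.params * a.bytes_per_param / a.batch  # weight reads amortized over the batch
    compute_j = flops * a.pj_per_flop * 1e-12
    memory_j = bytes_moved * a.pj_per_byte * 1e-12
    return {"compute": compute_j, "memory": memory_j, "total": compute_j + memory_j}


for batch in (1, 64):
    e = energy_per_token(DecodeAssumptions(batch=batch))
    share = e["memory"] / e["total"]
    print(f"batch={batch}: {e['total']:.3f} J/token, memory share {share:.2f}")
# batch=1: 0.770 J/token, memory share 0.91
# batch=64: 0.081 J/token, memory share 0.14
```

Under these placeholder numbers, weight traffic dominates at batch size 1 and shrinks only when many tokens share each pass over memory. This suggests why small-batch, sparse, or irregular inference fits poorly on hardware optimized for dense, regular workloads.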

Headlines

Last week’s headlines featured new semiconductor developments from SK hynix, TSMC, and imec, advances in quantum, photonic, and neuromorphic hardware, major AI infrastructure moves in data centers and cloud, and the launch of Claude Opus 4.5.

🦾 Semiconductors

SK hynix launches honey banana HBM Chips to popularize semiconductors in Korea (Biz Chosun)

⚛️ Quantum

Terra Quantum Develops Laser System to Treat Cardiovascular Disease (The Quantum Insider)

Japan to Link Major Cities with 600-km Quantum Encryption Network (The Quantum Insider)

Scientists Set New Mobility Record in Quantum-Compatible Semiconductor (The Quantum Insider)

SEALSQ and Quobly announce collaboration to advance secure and scalable quantum technologies (SEALSQ)

Variational Double Bracket Flow with Sparse Pauli Dynamics Achieves <1% Error for 100-qubit Heisenberg Lattices (Quantum Zeitgeist)

10-Qubit Array Advances Germanium Quantum Computing (Quantum Zeitgeist)

Trumpf aims to improve its lasers with quantum computers (The Quantum Insider)

⚡️ Photonic / Optical

Light-Speed Ambition: GlobalFoundries acquires AMF and Infinilink to power the AI datacenter revolution (GlobalFoundries)

Single-Photon Switch Could Enable Photonic Computing (The Quantum Insider)

🧠 Neuromorphic

Neuromorphic Accelerator Performance Bottlenecks Modeled, Revealing 3.38x, 3.86x Gains through Optimized Sparsity (Quantum Zeitgeist)

A Korean Research Team Advances Neuromorphic Semiconductor Technology (BusinessKorea)

VLSI expert partners with Innatera to advance neuromorphic computing education (New Electronics)

Neuromorphic Hardware Enables Low-Power, Event-Based Pupil Tracking with Microsecond Resolution (Quantum Zeitgeist)

💥 Data Centers

Cooling issue at data center halts futures trading for CME Group (Data Center Dynamics)

☁️ Cloud

Google drops EU antitrust complaint against Microsoft as Brussels’ cloud probe intensifies (Capacity Global)

🤖 AI

Introducing Claude Opus 4.5 (Anthropic)

Readings

This reading list covers Europe’s diamond ambitions, open chiplet designs, quantum insights from diamond defects, nonlinear photonic architectures, neuromorphic systems with time sync and cooling, and the AI-driven shift in data center buildouts.

🦾 Semiconductors

Europe’s diamond moment: A new semiconductor ecosystem in the making (tech.eu) (7 mins)

Toward Open-Source Chiplets for HPC and AI: Occamy and Beyond (arXiv) (37 mins)

Improving IC System Quality and Performance (SemiEngineering) (14 mins – Video)

The real-world impact of silicon lifecycle management on chip architectures (SemiEngineering) (24 mins)

⚛️ Quantum

Diamond defects, now in pairs, reveal hidden fluctuations in the quantum world (Princeton Engineering) (7 mins)

Bain’s Quantum Computing Report: Advances to Real-World Applications (Quantum Zeitgeist) (12 mins)

What is the difference between a TPU (Tensor Processing Unit) and a QPU (Quantum Processor)? (Quantum Zeitgeist) (13 mins)

⚡️ Photonic / Optical

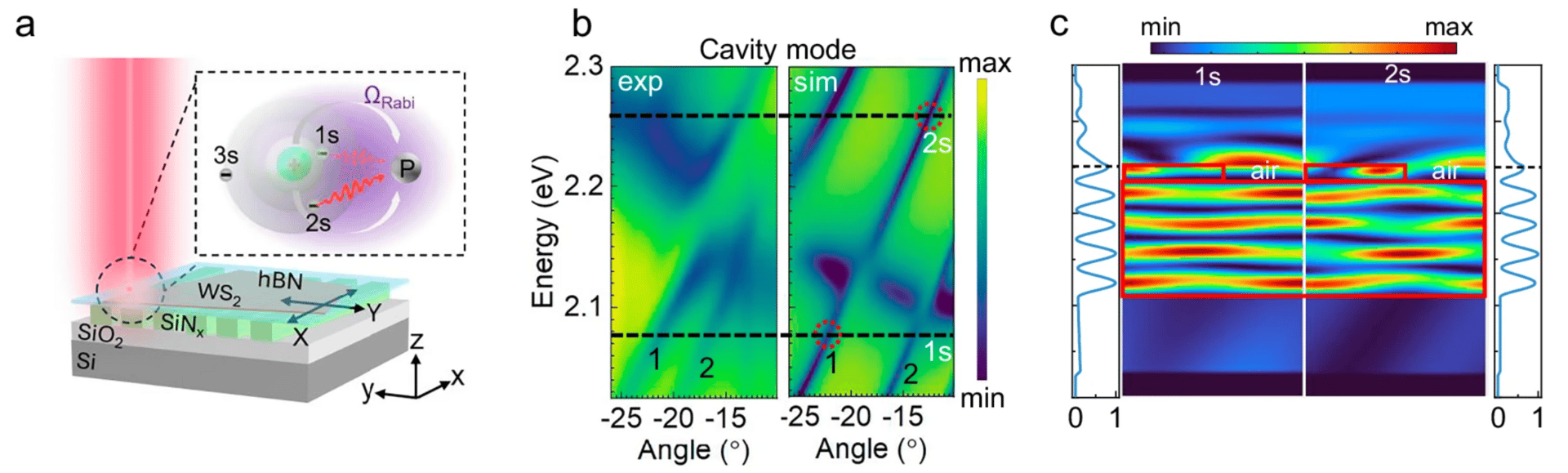

On-chip photonic crystal dressed Rydberg exciton polaritons with enhanced nonlinearity in monolayer WS2 (Nature Communications) (57 mins)

Photonic fully-connected hybrid beamforming using microring weight banks (Nature Communications) (64 mins)

🧠 Neuromorphic

Neuromorphic detection and cooling of microparticles in arrays (Nature Communications) (63 mins)

A deterministic neuromorphic architecture with scalable time synchronization (Nature Communications) (77 mins)

💥 Data Centers

75% of new data center projects target AI workloads — report (Data Center Knowledge) (13 mins)

AWS has more than 900 data centers — report (Data Center Dynamics) (5 mins)

Funding News

After several very active weeks, this one was noticeably quieter and unusually concentrated in early-stage activity. It was essentially a week of photonics. Did we miss any rounds?

| Amount | Name | Round | Category |
|---|---|---|---|
| €1M | | | Photonics |
| $4.5M | | | Photonics |
| €10M | | | Quantum |
| $6.5M | | | Photonics |
| $10M | | | Semiconductors |

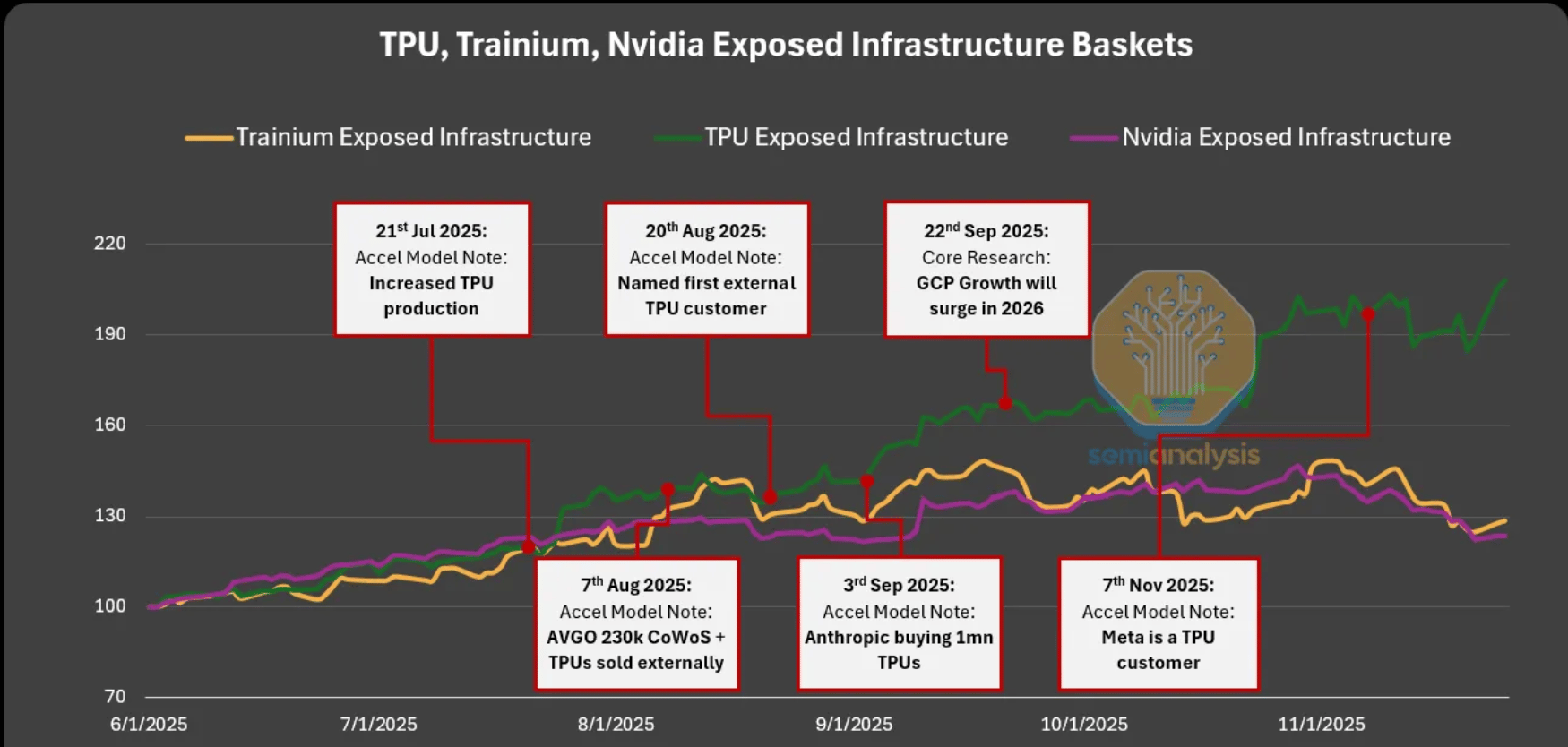

Bonus: Google’s TPU Push Is Becoming a Real Threat to NVIDIA

A few key points on Google’s aggressive push to commercialize TPUs and challenge NVIDIA’s dominance:

Google is now selling TPUs directly to multiple external firms, which represents a major shift in its commercialization strategy.

Claude Opus 4.5 and Gemini 3 (“the two best models in the world”) run mostly on Google TPUs and Amazon Trainium, not on NVIDIA chips.

Gemini 3 was trained entirely on TPUs, showing that TPU performance is competitive at the highest model quality levels.

Anthropic is deploying up to 1 million TPUs, with 400,000 purchased as hardware racks and 600,000 rented through GCP, positioning Google as a true merchant silicon vendor.

OpenAI has already lowered its NVIDIA TCO by about 30% simply by using the option of adopting TPUs as leverage in negotiations, showing the pricing pressure Google can now apply.

Meta, SSI, xAI, and potentially OpenAI are now being targeted as external TPU customers, signalling a rapidly expanding TPU ecosystem beyond Google’s own workloads.

Source: TPUv7: Google Takes a Swing at the King (SemiAnalysis)