Happy Monday! Here’s what’s inside this week’s newsletter:

Deep dive: AWS Trainium3 enters the market as a credible challenger, outlining new compute, memory, and interconnect designs alongside a maturing software stack aimed at lowering the cost of large-scale training and inference.

Spotlights: Marvell accelerates optical connectivity with its acquisition of Celestial AI, and Moore Threads (often described as “China’s Nvidia”) surges in its market debut amid growing domestic chip ambitions.

Headlines: Semiconductor updates from UMC, Infineon, and Merck, a broad set of quantum hardware and cloud advances, progress in photonic and neuromorphic platforms, and major AI data center and cloud initiatives.

Readings: Semiconductor supply-chain and lifecycle security themes, new developments in quantum communication and compilation, and advances in photonic memory and spiking networks.

Funding news: A busy week of rounds across semiconductors, quantum, photonics, data centers, and cloud, with every disclosed amount above $20M and an unusual absence of small early-stage checks.

Bonus: Europe advances a €20B plan for sovereign AI Gigafactories, signaling a coordinated push to expand hyperscale compute capacity for frontier-model development and scientific workloads.

Deep Dive: Trainium3 – AWS as a Potential Challenger to NVIDIA?

Last week, AWS introduced Trainium3, a new generation of its custom AI accelerator that aims to compete not on raw peak FLOPs alone but on performance per total cost of ownership. Trainium3 is designed around clear goals: faster time to market, lower cost per model, and an open software ecosystem that extends beyond AWS’s internal workloads.

Trainium3 improvements over Trainium2

Performance and efficiency improvements

- Compute performance: Up to 4.4× higher

- Memory bandwidth: Almost 4× higher

- Energy efficiency: 4× greater

Built on TSMC’s 3 nm N3P process, Trainium3 benefits from higher clocks and lower power consumption. AWS also redesigned the interconnect fabric, which is crucial for large models that are limited by cross-chip communication.

Rack-scale architecture improvements

- NL32x2 (air cooled): two racks, 64 chips total.

- NL72x2 (liquid cooled): two racks, 144 chips total.

Both designs move from the 2D and 3D torus topologies of Trainium2 to a switched scale-up fabric. This shift supports the communication patterns used in modern MoE architectures, where all-to-all collectives become dominant.
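A rough sketch can illustrate why all-to-all traffic favors a switched fabric. It compares the average number of link hops between chips on a torus with an idealized single-tier switch. The torus sizes, function names, and the two-link switch model are illustrative assumptions, not AWS's actual topology parameters.

```python
from itertools import product


def ring_distance(a: int, b: int, size: int) -> int:
    """Shortest hop count between positions a and b on a ring of `size` nodes."""
    d = abs(a - b)
    return min(d, size - d)


def torus_avg_hops(dims: tuple[int, ...]) -> float:
    """Average shortest-path link hops between distinct nodes of a torus."""
    nodes = list(product(*(range(n) for n in dims)))
    total = 0
    pairs = 0
    for src in nodes:
        for dst in nodes:
            if src == dst:
                continue
            # On a torus, the distance is the sum of per-dimension ring distances.
            total += sum(ring_distance(s, d, n) for s, d, n in zip(src, dst, dims))
            pairs += 1
    return total / pairs


# Assumption: in an idealized single-tier switched fabric, every pair of chips
# is connected via chip -> switch -> chip, i.e. two link hops regardless of size.
SWITCHED_LINK_HOPS = 2.0

if __name__ == "__main__":
    for dims in [(4, 4), (8, 8), (4, 4, 4)]:  # hypothetical torus sizes
        print(dims, round(torus_avg_hops(dims), 2), "vs switched", SWITCHED_LINK_HOPS)
```

In this simplified model the torus average grows with the size of the system, while the switched path length stays constant. That is the property that matters when every chip exchanges data with every other chip, as in MoE all-to-all collectives.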

AWS’s TCO-first approach to scaling Trainium3

Total cost of ownership (TCO) refers to the combined cost of hardware, deployment, power, cooling, and operational overhead over the lifetime of a system. Trainium3 is designed to lower these costs through faster rollout and reduced infrastructure requirements.
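As a minimal sketch of the TCO definition above, the calculation can be written as one-time costs plus recurring costs over the system lifetime. The class name, field names, and all numbers below are hypothetical placeholders in arbitrary units, not AWS figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SystemCosts:
    hardware: float             # one-time purchase cost
    deployment: float           # one-time installation and facility work
    power_per_year: float       # recurring energy cost
    cooling_per_year: float     # recurring cooling cost
    operations_per_year: float  # recurring staffing and maintenance overhead


def total_cost_of_ownership(costs: SystemCosts, lifetime_years: float) -> float:
    """Sum one-time costs and recurring costs over the system lifetime."""
    one_time = costs.hardware + costs.deployment
    recurring_per_year = (
        costs.power_per_year + costs.cooling_per_year + costs.operations_per_year
    )
    return one_time + recurring_per_year * lifetime_years


# Placeholder values chosen only to make the arithmetic easy to follow.
example = SystemCosts(
    hardware=100.0,
    deployment=10.0,
    power_per_year=8.0,
    cooling_per_year=2.0,
    operations_per_year=5.0,
)
tco = total_cost_of_ownership(example, lifetime_years=4)
print(tco)  # 110 one-time + 15 per year * 4 years
```

Framed this way, design choices such as air cooling or cableless trays show up as lower deployment and operations terms rather than as higher peak performance.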

Hardware scaling approach

- Air-cooled racks allow deployment in legacy facilities.

- Cableless trays reduce assembly time and potential failure points.

- Multiple generations of switches provide supply chain resiliency.

- Redundant fabric lanes reduce downtime and simplify serviceability.

Software scaling approach

AWS is shifting its software stack from a weakness to a priority by rolling out a native PyTorch backend, open sourcing its compiler and kernel libraries, and improving performance tooling. This makes Trainium3 easier to adopt for mainstream ML teams and reduces the gap to CUDA workflows.

AWS as a potential challenger to NVIDIA

Trainium3 is most competitive where throughput per dollar, energy efficiency, and large-scale deployment matter more than peak precision. Early customers report up to 50% lower training and inference costs. While it does not replace NVIDIA for all workloads, it offers a credible alternative for cost-sensitive, large-cluster AI infrastructure and strengthens AWS’s position in frontier-scale compute.

Trainium3 also reduces some of the software friction that previously made it difficult to adopt alternatives to CUDA-based workflows. With its PyTorch-native backend, open-source compiler and kernel libraries, and expanded rack-scale systems, it lowers several practical barriers to integrating non-CUDA accelerators. These changes strengthen AWS’s position as a viable option for teams seeking diversity in their large-scale AI infrastructure.

Source: AWS Trainium3 Deep Dive | A Potential Challenger Approaching (SemiAnalysis)

Spotlights

⚡️ Marvell to Acquire Celestial AI, Accelerating Scale-up Connectivity for Next-Generation Data Centers (BusinessWire)

Marvell is acquiring Celestial AI to accelerate the shift from copper to all-optical interconnects in next-generation AI data centers. As systems move to multi-rack, any-to-any scale-up architectures, limitations in electrical links are driving a transition to optical I/O, not only between racks but also within systems and inside packages.

Celestial AI’s Photonic Fabric enables optical connectivity directly into XPUs via co-packaged chiplets, with more than 2× the power efficiency of copper, nanosecond-class latency, excellent thermal stability, and up to 16 Tb/s per chiplet.

The acquisition expands Marvell’s connectivity portfolio, and Celestial AI is already engaged with hyperscalers planning to use its technology. Marvell will acquire the company for approximately $3.25B upfront, plus up to $2.25B in contingent earnout.

🦾 Chinese challenger to Nvidia surges 425% in market debut (Financial Times)

Moore Threads, a Beijing-based chip company founded in 2020 by a former Nvidia executive, soared 425% on its Shanghai Star Market debut after raising $1.1B. The jump reflects confidence in China’s push to build domestic AI chips after US restrictions on Nvidia.

Still, Bernstein expects Moore Threads to generate about $58M in chip sales this year, far below Huawei and Nvidia at roughly $10B each. Nvidia’s China market share is projected to drop from 40% in 2025 to 8% next year.

The company shifted manufacturing from TSMC to SMIC after being added to the US entity list. Its IPO is part of a broader wave of upcoming Chinese AI chip listings.

Headlines

Last week’s headlines featured new semiconductor moves from UMC, Infineon, and Merck, a broad wave of quantum updates, advances in photonics and neuromorphic tech, major data center and cloud initiatives, and several new AI announcements.

🦾 Semiconductors

300-mm Ultrathin Wafer Handling: Presentation Highlights High-Temperature-Stable Temporary Bonding and IR Laser Debonding with Si Carriers (Brewer Science)

Team Invents New Thin Film Materials Based on Nobel Prize Winning Methods (University of Houston)

⚛️ Quantum

D-Wave Announces Formation of U.S. Government Business Unit (The Quantum Insider)

First Successful Proof Of Quantum Teleportation Between Two Different Quantum Dots (The Quantum Insider)

Pasqal Brings Neutral-Atom QPUs to Scaleway’s Quantum Cloud (The Quantum Insider)

Voyager and Infleqtion Partner to Launch Quantum Era in Space (The Quantum Insider)

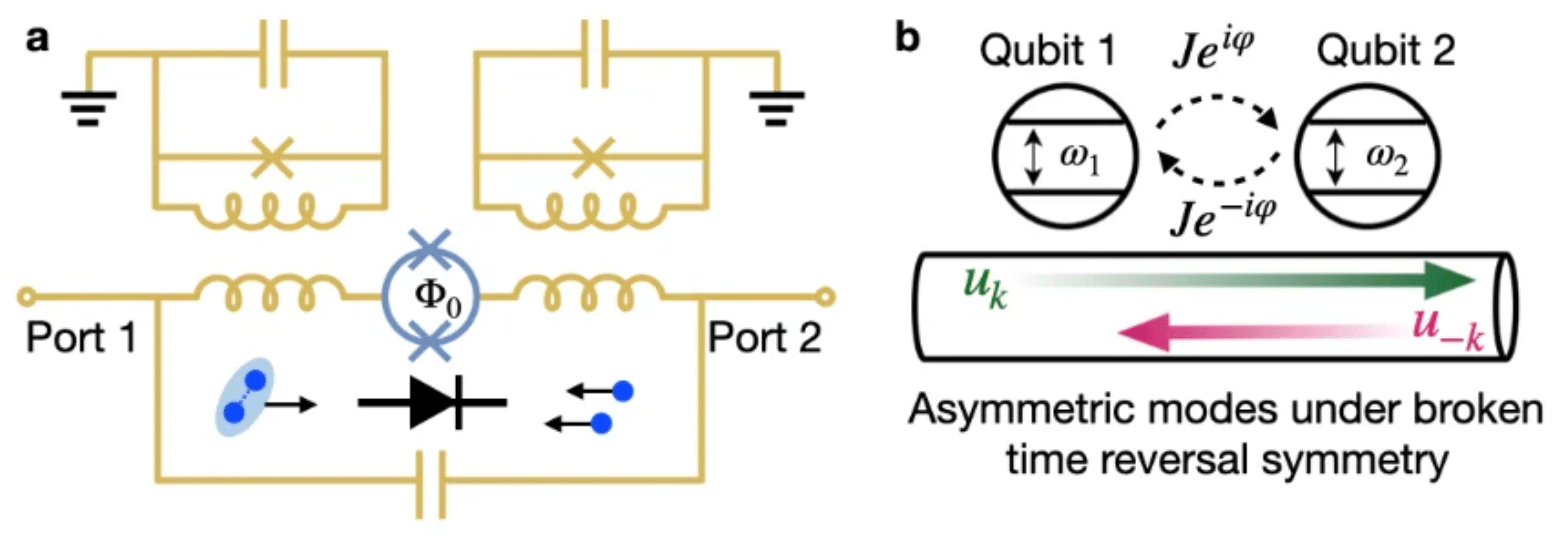

One Way or Another: UCLA Researchers Report On-Chip Diode Could Simplify Quantum Processor Architecture (The Quantum Insider)

Insider Data Trends Show Quantum Entering Industrial Deployment (The Quantum Insider)

AI Is Emerging as Quantum Computing’s Missing Ingredient, NVIDIA-Led Research Team Asserts (The Quantum Insider)

⚡️ Photonics

🧠 Neuromorphic

Innatera and 42 Technology partner to advance neuromorphic edge AI (New Electronics)

💥 Data Centers

Palantir teams with Nvidia, CenterPoint Energy for software to speed up AI data center construction (Reuters)

☁️ Cloud

AWS and Google Cloud collaborate on multicloud networking (Google Cloud)

EU digital ministers push against cloud-sovereignty definition that would block US providers (Euractiv)

📡 Networking

Keysight Introduces New Handheld Analyzer Enabling 120-MHz IQ Streaming for Gap-Free Signal Capture (Keysight)

🤖 AI

Mistral closes in on big AI rivals with Mistral 3 open weight frontier and small models (TechCrunch)

Snowflake and Anthropic Announce $200 Million Partnership to Bring Agentic AI to Global Enterprises (Snowflake)

Readings

This reading list covers overseas fab strategies and lifecycle security in semiconductors, long-range quantum key distribution, advances in photonic memory and spiking networks, and scalable neuromorphic processors.

🦾 Semiconductors

TSMC Overseas Fabs – A Success? (SemiAnalysis) (54 mins)

3D-printed chip packages could supercharge semiconductor manufacturing (University of Texas) (7 mins)

Interactive U.S. Semiconductor Ecosystem Map (Semiconductors.org) (15 mins)

Harnessing silicon lifecycle management for chip security (SemiEngineering) (18 mins)

Benefits and Challenges of Using Chiplets (SemiEngineering) (14 mins – Video)

⚛️ Quantum

Decoy-state Quantum Key Distribution Achieves Positive Secret Key Rates over 227 km with Telecom Single-Photon Source (Quantum Zeitgeist) (16 mins)

Exploiting Movable Logical Qubits Enables Reduced Circuit Depth for Lattice Surgery Compilation in Quantum Computation (Quantum Zeitgeist) (13 mins)

⚡️ Photonics

Photonic memory moves closer to practical deployment (Optics.org) (12 mins)

Photonic compute-in-wire: remotely driven photonic deep neural network with single nonlinear loop (EurekAlert!) (16 mins)

Photonic Spiking Neural Network Achieves 90% Pattern Classification with Lightweight Hardware and 80.5% Efficiency (Quantum Zeitgeist) (13 mins)

Enhanced photonic time crystals, temporal exceptional points for optical sensing (Quantum Zeitgeist) (12 mins)

The Weird Hybrid Material That Could Turbocharge Photonic Computing (SciTechDaily) (4 mins)

🧠 Neuromorphic

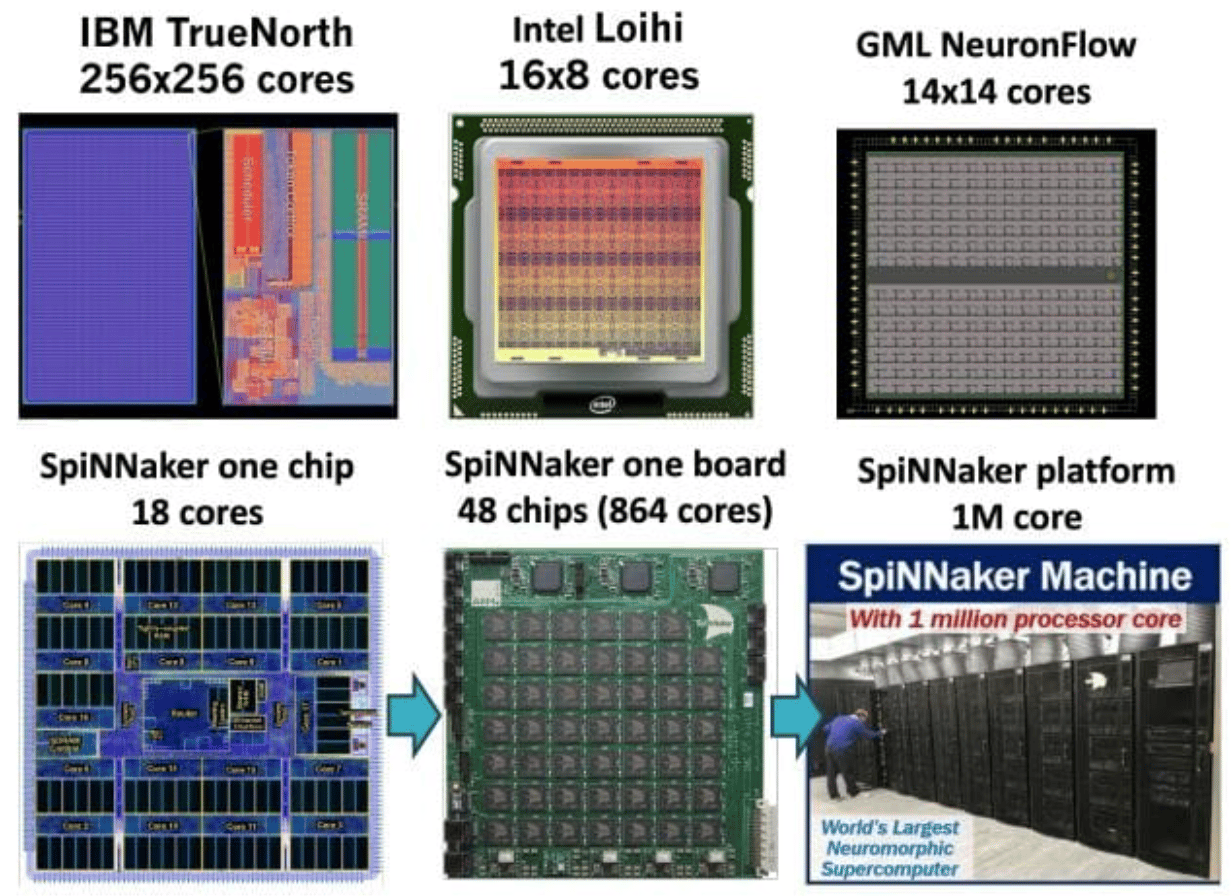

Risc-v Cores and Neuromorphic Arrays Enable Scalable Digital Processors for EdgeAI Applications (Quantum Zeitgeist) (7 mins)

Neuromorphic Computing Market Is Growing at a CAGR of 18.12% (openPR) (15 mins)

Loihi 2 Neuromorphic Hardware Enables Autonomous Robot Control with Reinforcement Learning and Spiking Neural Networks (Quantum Zeitgeist) (18 mins)

💥 Data Centers

New Data Center Developments: December 2025 (DataCenterKnowledge) (15 mins)

🤖 AI

Guided learning lets "untrainable" neural networks realize their potential (MIT CSAIL) (8 mins)

Funding News

Last week brought a broad mix of rounds across the stack, with most deals landing at the Seed and Series A stages and concentrated in semiconductors. Notably, all disclosed amounts were above $20M, leaving the week without the typical small early-stage checks.

| Amount | Name | Round | Category |
|---|---|---|---|
| $23M+ | | | Semiconductors |
| $28M | | | AI |
| $32M | | | Quantum |
| $33M | | | Photonics |
| $35M | | | Semiconductors |
| $46M | | | Semiconductors |
| $50M | | | Decentralized Compute |
| $110M | | | Quantum |
| $100M | | | Data Centers |
| $300M | | | Cloud |
| $2B | | | Semiconductors |
| Undisclosed | | | Semiconductors |

Bonus: EU moves ahead with €20B plan for AI Gigafactories

The European Commission has approved a new Memorandum of Understanding (MoU) with the EIB and EIF that kicks off work on four to five AI Gigafactories. These facilities are planned as large, energy-efficient compute hubs for training frontier models and enabling advanced scientific research.

The MoU gives practical momentum to the €20B InvestAI initiative announced earlier this year and shows that Europe is serious about expanding its own hyperscale AI infrastructure. A formal call to select the Gigafactory locations and consortia will follow once the EuroHPC regulation update is completed.

Memorandum of Understanding on AI Gigafactories (European Commission)