Happy Monday! Here’s what’s inside this week’s newsletter:

Deep dive: Six potential scenarios for the future of quantum computing, outlining how the field could evolve from 2025 onward, from unscalable architectures and classical algorithm breakthroughs to fault-tolerant systems and disruptive quantum leaps that could reshape computing.

Spotlights: The semiconductor industry closes in on the 400 Gb/s photonics milestone, and Thiel-funded U.S. startup Substrate raises $100 M to challenge ASML’s dominance in lithography tools.

Headlines: Major semiconductor moves from GlobalFoundries, Qualcomm, and Nvidia, breakthroughs in quantum computing and space-based systems, progress in photonic and neuromorphic hardware, and large-scale expansions in data center, cloud, and AI infrastructure.

Readings: Challenges in die stacking and multi-die reliability, advances in graphene and MEMS, momentum in quantum investment and algorithms, photonic microresonator breakthroughs, neuromorphic olfaction, and pitfalls in liquid cooling for data centers.

Funding news: Financings across (computing-related) AI, photonics, semiconductors, and cooling, with several early-stage rounds and a few larger deals, including Substrate’s $100 M venture round and Fireworks AI’s $250 M Series C.

Bonus: Highlights from NVIDIA’s GTC 2025, featuring new processors, hybrid quantum-classical interconnects, and open 6G software platforms. The company also unveiled a range of new products for AI data centers, networking, and industrial systems.

Deep Dive: Six Potential Scenarios of the Quantum Future

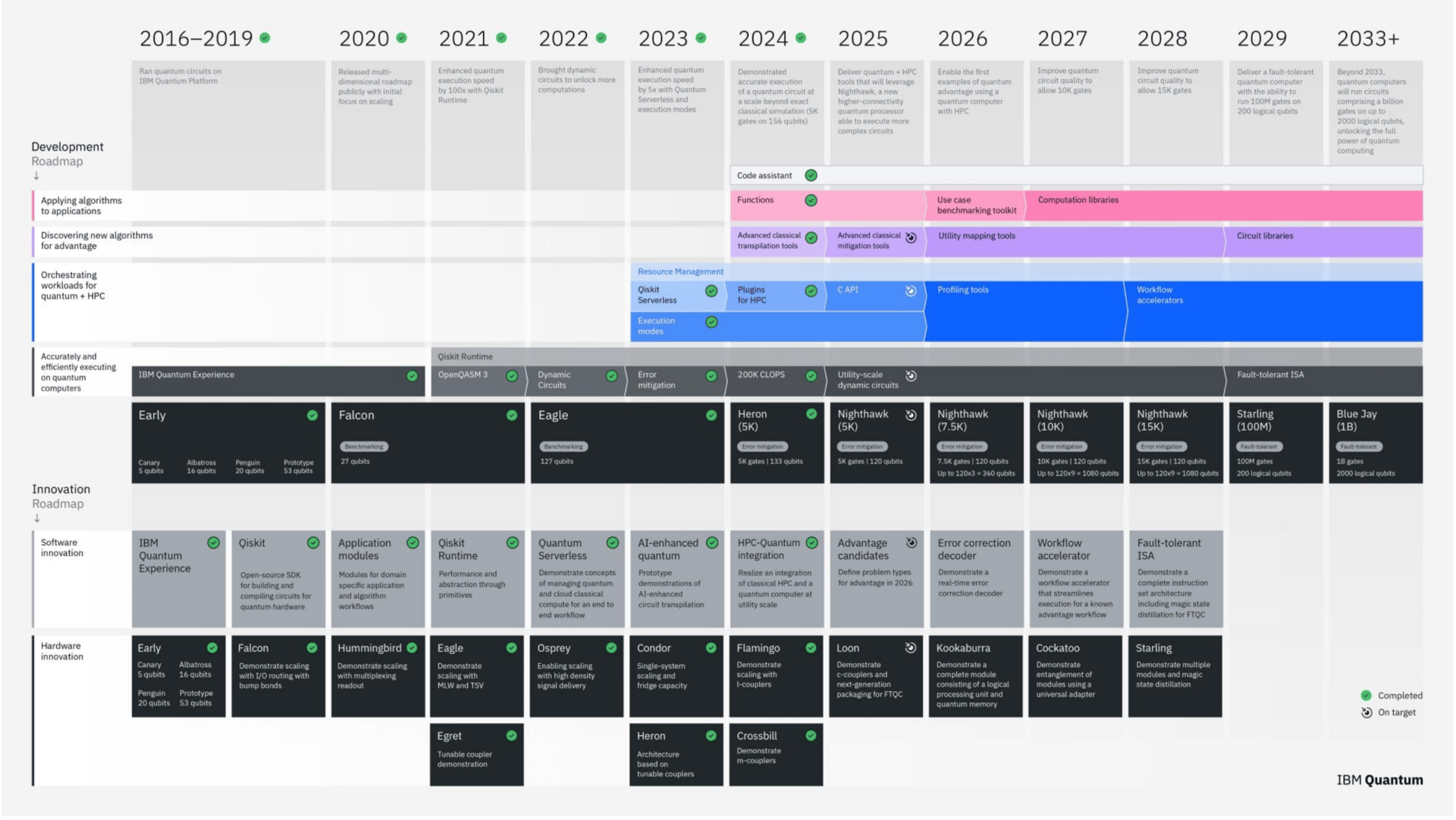

Quantum computing is entering a decisive decade. Google’s Willow processor demonstrated below-threshold error correction with 105 qubits in December 2024, IBM targets 200 logical qubits by 2028, and D-Wave continues to expand quantum annealing beyond 5,000 qubits. Yet the field remains divided between optimism and realism.

The following six scenarios outline how quantum computing could evolve from 2025 onward. They synthesize recent experimental results, corporate roadmaps, and fundamental research challenges.

Scenario 1 – Quantum Computing Proves Unscalable (Probability 5%)

Scaling to millions of qubits could prove impossible. Control electronics, cooling infrastructure, and error correction grow exponentially more complex, while data-loading and readout remain unsolved. Governments’ slow deployment of post-quantum cryptography and the small commercial market indicate limited confidence in near-term breakthroughs.

Summary: Quantum computing remains confined to research, sensing, and encryption niches.

Scenario 2 – Classical Algorithms Supersede Quantum (Probability 10%+)

Tensor-network methods now reproduce quantum results on conventional systems, even simulating IBM’s 127- and 433-qubit experiments. AI-driven algorithm design accelerates this trend, finding efficient classical alternatives for quantum circuits. As in past hardware revolutions, improved algorithms may narrow or eliminate quantum advantage.

Summary: Classical computing absorbs most benefits once expected from quantum, narrowing its practical relevance.

Scenario 3 – Quantum Scales but Underwhelms (Probability 1–10%)

Quantum systems continue to grow but deliver limited gains. Improvements of 10–30 percent in optimization or simulation are helpful but not transformative. Quantum memory and circuit depth remain constraints, and most demand comes from public funding rather than commercial users.

Summary: Quantum becomes a specialized accelerator for selected high-performance computing workloads.

Scenario 4 – Incremental Growth and Fault Tolerance Achieved (Probability 50%+)

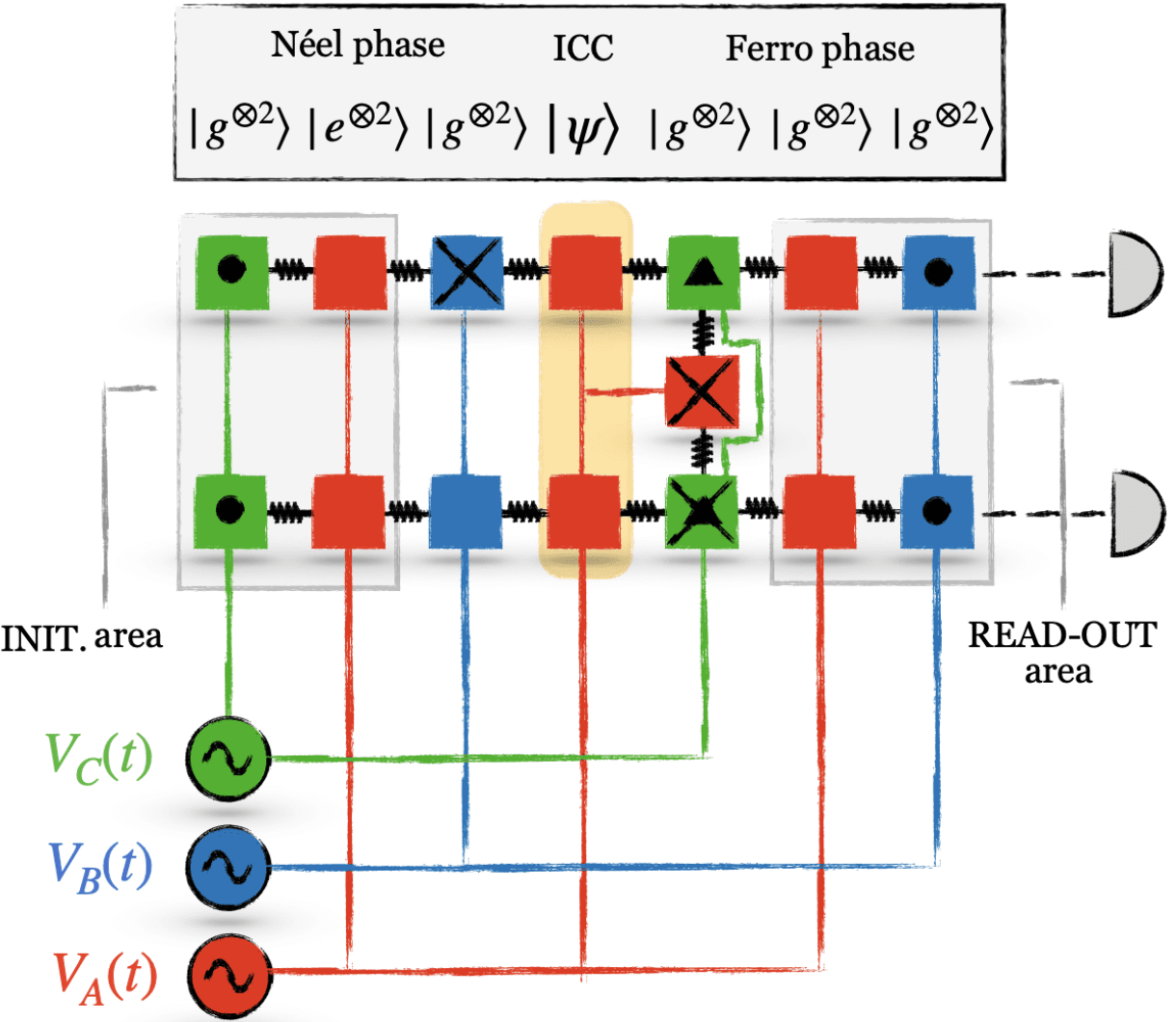

Quantum computing progresses steadily rather than explosively, with consistent improvements in qubit fidelity, coherence, and error correction. Below-threshold error correction, demonstrated by Google’s Willow processor, confirms the foundation for scalable systems. IBM’s roadmap reinforces this trajectory, outlining a gradual path from current 133-qubit processors toward 200 logical qubits by 2028. Early use cases in chemistry, finance, and logistics already show measurable, if modest, advantages.

Summary: Quantum computing becomes a stable, enterprise-level complement to classical HPC.

2025 IBM Quantum Roadmap. Source: Quantum Zeitgeist.

Scenario 5 – NISQ Era Persists and Fault Tolerance Fails (Probability 10–20%)

Full fault tolerance may remain out of reach due to correlated errors and high overhead. Instead, companies refine noise-mitigation and hybrid workflows that deliver usable results. Applications in optimization, logistics, and quantum machine learning grow despite imperfect systems.

Summary: A “good-enough” quantum industry emerges, profitable but limited in scope and long-term impact.

Scenario 6 – Disruptive Growth and Quantum Breakthrough (Probability 30–40%)

A decisive breakthrough, whether through topological, photonic, or another yet-unknown qubit design, enables stable, large-scale quantum computation. AI-assisted algorithm discovery accelerates progress, delivering exponential speedups for complex problems. Massive public and private investment, global talent shifts, and corporate readiness programs converge, fueling expectations of rapid deployment once the technology matures.

Summary: A genuine breakthrough transforms materials science, pharmaceuticals, and cryptography, reshaping computing itself.

Source: Quantum Computing Future – 6 Alternative Views of the Quantum Future Post 2025 (Quantum Zeitgeist, 2025)
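
A quick sanity check on the probabilities quoted for the six scenarios: the sketch below simply adds up the stated ranges. Treating open-ended figures such as "10%+" and "50%+" as a single value (lower bound equal to upper bound) is an assumption made here for illustration, not something the source specifies.

```python
# Probability ranges (in percent) as quoted for each scenario.
# Assumption: open-ended values like "10%+" are entered as (10, 10),
# i.e. only their stated lower bound is counted.
scenarios = {
    "1 Unscalable": (5, 5),
    "2 Classical supersedes quantum": (10, 10),
    "3 Scales but underwhelms": (1, 10),
    "4 Incremental growth, fault tolerance": (50, 50),
    "5 NISQ persists": (10, 20),
    "6 Disruptive breakthrough": (30, 40),
}

# Sum the lower and upper ends of every range separately.
low_total = sum(low for low, _ in scenarios.values())
high_total = sum(high for _, high in scenarios.values())

print(f"Sum of lower bounds: {low_total}%")
print(f"Sum of upper bounds: {high_total}%")
```

Even the lower bounds add up to 106%, and the upper bounds to 135%, so the figures are probably best read as independent plausibility estimates for overlapping outcomes rather than as a distribution over mutually exclusive futures.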

Spotlights

💥 Semiconductor Industry Closes in on 400 Gb/s Photonics Milestone (IEEE Spectrum)

“The optical links that help connect the computers densely packed inside data centers may soon experience pivotal upgrades. At least two companies, Imec and NLM Photonics, now say they have either achieved 400-gigabit-per-second per lane data rates, the next key goal for data centers, or have these speeds within sight. Moreover, instead of relying on exotic new technologies, the devices of both teams are based on silicon.

[…]

Silicon photonics was generally not thought capable of scaling to 400 Gb/s per lane due to energy efficiency and other factors, says Lewis Johnson, chief technology officer and co-founder of NLM Photonics in Seattle.”

🦾 US startup Substrate raises $100 M for development of lithography tools to challenge ASML (Data Center Dynamics)

“US startup Substrate claims to have developed a chipmaking tool that can compete with the advanced lithography tools manufactured by Netherlands-based ASML.

The company also announced it raised $100 million in a funding round, which valued it at $1 billion. The round saw participation from Peter Thiel’s Founders Fund, General Catalyst, Allen & Co., Long Journey Ventures, Valor Equity Partners, and the CIA-backed not-for-profit firm In-Q-Tel.”

Headlines

Last week’s headlines featured major semiconductor moves from GlobalFoundries, Qualcomm, and Nvidia, breakthroughs in quantum computing and space-based systems, progress in photonic and neuromorphic hardware, and large-scale expansions in data center, cloud, and AI infrastructure.

🦾 Semiconductors

GlobalFoundries plans €1.1 billion investment to expand chip manufacturing in Germany (GlobalFoundries)

Socionext Unveils “Flexlets™”, a Configurable Chiplet Ecosystem to Accelerate Multi-die Silicon Innovation (PR Newswire)

Qualcomm Unveils AI200 and AI250 — Redefining Rack-Scale Data Center Inference Performance (Qualcomm)

Scientists create new type of semiconductor that holds superconducting promise (New York University)

⚛️ Quantum

99.9 %-Fidelity Quantum Computing: Optimization Overcomes Disorder, Achieving Required Pulse Accuracy (Quantum Zeitgeist)

Fault-Tolerant Quantum Computers Achieve ≈ 22 Years to 1 Day Runtime Reduction, Enabling Utility-Scale CO₂ Utilization (Quantum Zeitgeist)

2× 124-quantum-qubits performance: Digitized Counterdiabatic Quantum Sampling achieves efficient Boltzmann distributions & gains (Quantum Zeitgeist)

Quantum Optical Clock Demonstration on Underwater Autonomous Submarine a Step Toward GPS-Free Navigation (The Quantum Insider)

Optimizing Space Exploration – SemiQon Innovations Enhance Capabilities of Spaceborne Vehicles (The Quantum Insider)

⚡️ Photonic / Optical

Engineers test photonic AI chips in space (Phys.org)

Superlight Photonics continues without founder (Bits&Chips)

🧠 Neuromorphic

Neuromorphic computer prototype learns patterns with fewer computations than traditional AI (TechXplore)

The UK Multidisciplinary Centre for Neuromorphic Computing officially launches at the House of Lords (EdTech Innovation Hub)

💥 Data Centers

Nvidia to deploy Emerald AI’s orchestration software at 96 MW Aurora data center in Manassas, Virginia (Data Center Dynamics)

Nvidia and Deutsche Telekom to build data center in Munich, Germany – report (Data Center Dynamics)

☁️ Cloud

Microsoft Azure outage analysis (Capacity Global)

📡 Networking

New UWB application lab strengthens Infineon’s leadership in trusted connectivity system solutions (Infineon)

Nvidia makes $1 BN investment in Nokia (Tech.eu)

Readings

This week’s reading list explores challenges in die stacking and multi-die reliability, graphene and MEMS in electronics, quantum investment and algorithms, photonic breakthroughs in microresonators, neuromorphic chips for olfaction, and pitfalls in liquid cooling for data centers.

🦾 Semiconductors

Lithography: How to Kill 2 Monopolies with 1 Tool (SemiAnalysis) (27 mins)

Greater China MEMS Industry On the Rise: Consumer Leads the Way (Yole Group) (9 mins)

Small Material, Big Impact: Graphene in Electronic Applications (IDTechEx) (12 mins)

Ensuring Reliability Becomes Harder In Multi-Die Assemblies (SemiEngineering) (25 mins)

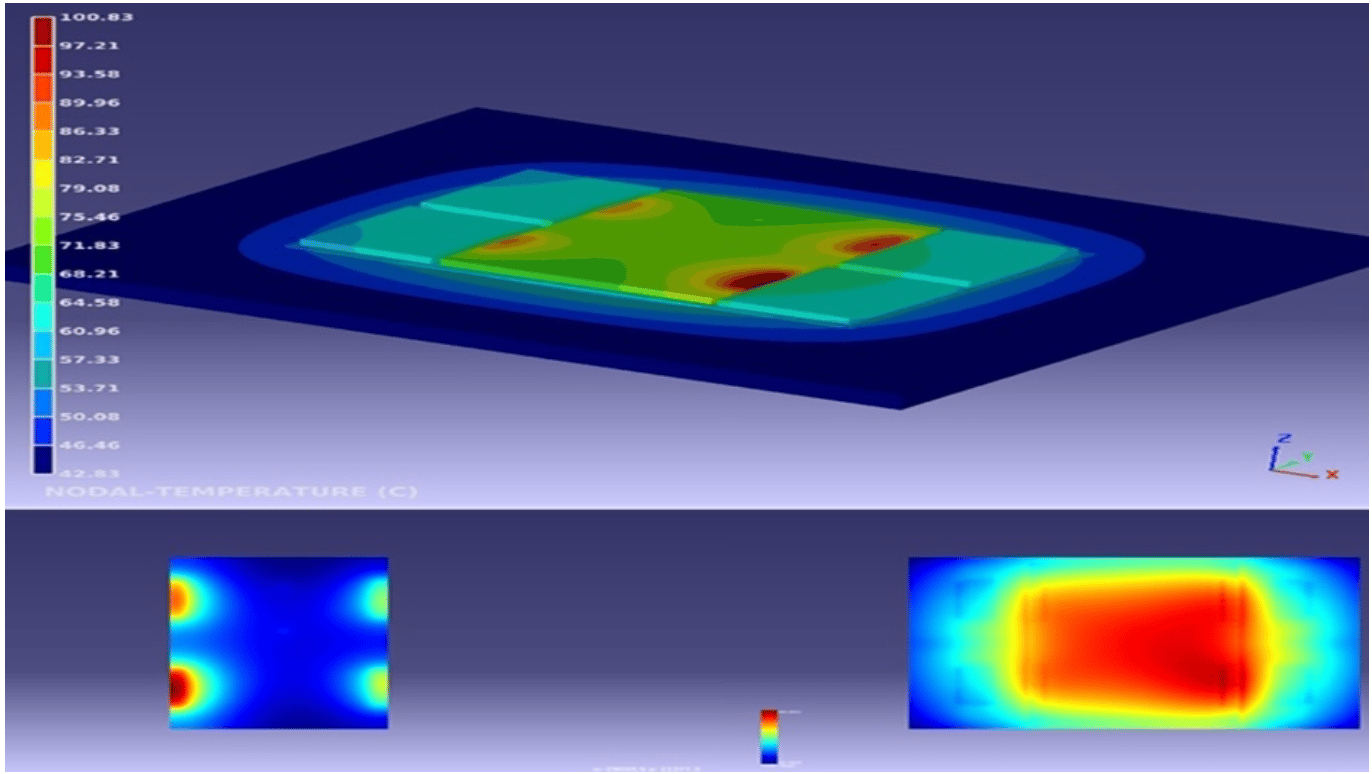

Thermal, Mechanical, And Material Stresses Grow With Die Stacking (SemiEngineering) (33 mins)

You Can Cool Chips With Lasers?!?! (IEEE Spectrum) (9 mins)

⚛️ Quantum

Quantum technology investment hits a ‘magic moment’ (McKinsey) (23 mins)

IBM Research Unveils New Quantum Algorithm Leveraging Group Theory for Potential Speedups (Quantum Zeitgeist) (25 mins)

⚡️ Photonic / Optical

Analysis of 2024 Photonics breakthroughs: Microresonators advance electron-photon control (Open Access Government) (5 mins)

Photonics Market worth $1,481.80 billion by 2030 at 6.3% (MarketsandMarkets) (5 mins)

🧠 Neuromorphic

Neuromorphic olfactory perception chips: towards universal odour recognition and cognition (Nature Reviews Electrical Engineering) (5 mins)

High-entropy oxide memristors: a frontier for brain-inspired electronics (EurekAlert!) (6 mins)

United States Neuromorphic Computing Market 2025 | Growth Drivers, Key Players & Investment Opportunities (OpenPR) (10 mins)

💥 Data Centers

How to ruin a perfectly good liquid cooling system (Data Center Dynamics) (8 mins)

Funding News

Last week’s financings spanned AI, photonics, semiconductors, and cooling. More AI-related rounds than usual were announced; as always, only those directly relevant to computing are included here. Deals ranged from early-stage seed rounds to a $250M Series C.

| Amount | Name | Round | Category |
|---|---|---|---|
| $11M | | | AI |
| $16M | | | Photonics |
| $21M | | | Cooling |
| $24M | | | AI |
| $80M (total) | | | Photonics |
| $100M | Substrate | Venture | Semiconductors |
| $250M | Fireworks AI | Series C | AI |
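
For a rough sense of how the listed volume splits by category, the amounts in the table can be tallied as in the sketch below. It uses only the figures shown above; counting the "$80M (total)" photonics entry as a single row is an assumption of this sketch.

```python
from collections import defaultdict

# (amount in $M, category) pairs copied from the funding table.
# Assumption: the "$80M (total)" photonics entry counts as one row.
rounds = [
    (11, "AI"),
    (16, "Photonics"),
    (21, "Cooling"),
    (24, "AI"),
    (80, "Photonics"),
    (100, "Semiconductors"),
    (250, "AI"),
]

# Accumulate the listed amounts per category.
totals = defaultdict(int)
for amount, category in rounds:
    totals[category] += amount

grand_total = sum(totals.values())

# Print categories from largest to smallest, with their share of the total.
for category, amount in sorted(totals.items(), key=lambda kv: -kv[1]):
    share = amount / grand_total
    print(f"{category:<15} ${amount}M  ({share:.0%} of listed volume)")
print(f"{'Total':<15} ${grand_total}M")
```

On these figures, AI accounts for $285M of the $502M listed, driven largely by the single $250M Series C.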

Bonus: Highlights from NVIDIA’s GTC

At last week’s GTC (“GPU Technology Conference”), NVIDIA’s annual flagship event, the company announced a series of major updates spanning data processing, quantum integration, and physical AI systems.

Highlights include:

BlueField-4 processor: A next-generation data processing unit combining Grace CPU and ConnectX-9 networking, delivering 800 Gb/s throughput to power large-scale AI factories.

NVQLink: A new high-speed interconnect that links quantum processors with NVIDIA GPUs for hybrid quantum-classical computing, supported at launch by 17 quantum hardware builders and 9 research labs.

AI-RAN stack: An AI-native 6G platform, now open-sourced to developers, serving as the software-defined foundation for programmable and intelligent wireless networks.

Aerial software: A 6G application built on the Aerial platform, developed with U.S. telecom partners to enable AI-driven networks, improve spectrum efficiency, and speed up next-generation deployment.

AI factory reference design: A blueprint for gigawatt-scale data centers aimed at modernizing government operations and securing national infrastructure.

IGX Thor processor: A high-reliability edge AI platform for industrial automation, robotics, and medical systems, built to handle real-time physical-world data.