Happy Monday! Here’s what’s inside this week’s newsletter:

Deep dive: Breaking the Memory Wall Pt. 1 examines how FMC and Majestic Labs address the widening gap between compute and memory, with material, node-, and system-level approaches that improve bandwidth, capacity, and persistence for memory-bound workloads.

Spotlights: IQM launches Halocene, a modular 150-qubit quantum computer line for error correction research, and SpiNNcloud delivers a brain-inspired supercomputer to the U.S. Neuromorphic Commons initiative.

Headlines: Semiconductor updates from Baidu, SK keyfoundry, and Tachyum, major quantum advances, continued progress in photonics and neuromorphic tech, and new data center and cloud investments shaping AI infrastructure.

Readings: HBM and NAND scaling, quantum simulation, photonic innovation, neuromorphic hardware for science and health, data center fragility, and AI funding dynamics.

Funding news: A high volume of rounds led by semiconductors and data centers, including major Series C raises for d-Matrix and FMC, and system-level investments in Majestic Labs and Firmus Technologies.

Bonus: Washington’s updated semiconductor strategy, where CSIS highlights flaws in the proposed Chip Security Act, while the Council on Foreign Relations outlines new recommendations to secure U.S. technology leadership.

Thanks to everyone who joined us at our Future of Computing conference in Paris! Huge thanks to iXcampus for hosting us, and to Creative Destruction Lab, HEC Paris, and Elaia for co-organizing ❤️

See you at the next one in London on March 24, 2026! We’re excited to once again have ConceptionX, Silicon Catalyst, IQ Capital, Barclays Eagle Labs, and XTX Ventures as supporting and co-organizing partners. You can sign up here 💡

Deep Dive: Breaking the Memory Wall Pt. 1 – How FMC and Majestic Labs Advance the Memory Stack

In light of two major funding rounds last week, we take a closer look at the technologies behind them. FMC raised €100M to commercialize its DRAM+ and 3D CACHE+ memory chips, while Majestic Labs raised more than $100M to build AI servers with 1000× the memory capacity of a top-tier GPU.

The Memory Wall

Compute throughput has scaled rapidly, but memory has not kept pace in capacity, bandwidth, access latency, or energy efficiency. Systems now spend much of their time and power budget moving data between caches, DRAM, and storage rather than performing useful computation, a limitation known as the “Memory Wall”.

As models increase in size and complexity, this bottleneck becomes more pronounced. Workloads such as large context windows, mixture-of-experts models, graph processing, and agentic systems require frequent access to large datasets, intensifying data movement and driving power consumption in AI data centers.
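To make the bottleneck concrete, here is a minimal roofline-style sketch in Python. The peak-compute and bandwidth figures are illustrative assumptions, not numbers from FMC, Majestic Labs, or any vendor, and the function names are ours.

```python
def attainable_flops(intensity, peak_flops, mem_bandwidth):
    """Roofline model: throughput is capped by compute or by memory traffic.

    intensity:     arithmetic intensity in FLOPs per byte moved
    peak_flops:    peak compute in FLOP/s (assumed value)
    mem_bandwidth: memory bandwidth in bytes/s (assumed value)
    """
    return min(peak_flops, intensity * mem_bandwidth)


def is_memory_bound(intensity, peak_flops, mem_bandwidth):
    # Below the "ridge point" the memory system, not the ALUs, sets the pace.
    return intensity < peak_flops / mem_bandwidth


# Hypothetical accelerator: 1 PFLOP/s peak compute, 4 TB/s memory bandwidth.
PEAK = 1e15
BW = 4e12

# Illustrative intensities (FLOPs per byte), chosen for the example only.
workloads = {
    "low-intensity (e.g. token-by-token decode)": 2.0,
    "high-intensity (e.g. large batched matmul)": 500.0,
}

for name, ai in workloads.items():
    frac = attainable_flops(ai, PEAK, BW) / PEAK
    print(f"{name}: memory-bound={is_memory_bound(ai, PEAK, BW)}, "
          f"{frac:.1%} of peak compute usable")
```

With these assumed figures the ridge point sits at 250 FLOPs per byte, so the low-intensity workload can use under 1% of peak compute no matter how fast the chip is.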

Overcoming the Memory Wall requires progress at several layers of the stack:

materials and device structures that define the behavior of memory cells (FMC)

node-level memory architectures that shape how data flows inside a compute node (FMC)

system-level connections that determine how memory interacts with accelerators (Majestic Labs)

FMC: Memory Innovation in Materials and at the Node Level

A long-standing trade-off in memory design is the choice between speed and persistence (the ability to retain data without power). DRAM and SRAM are fast but non-persistent and lose their data when powered down. Storage, in contrast, is persistent but too slow to serve AI workloads at the required speed.

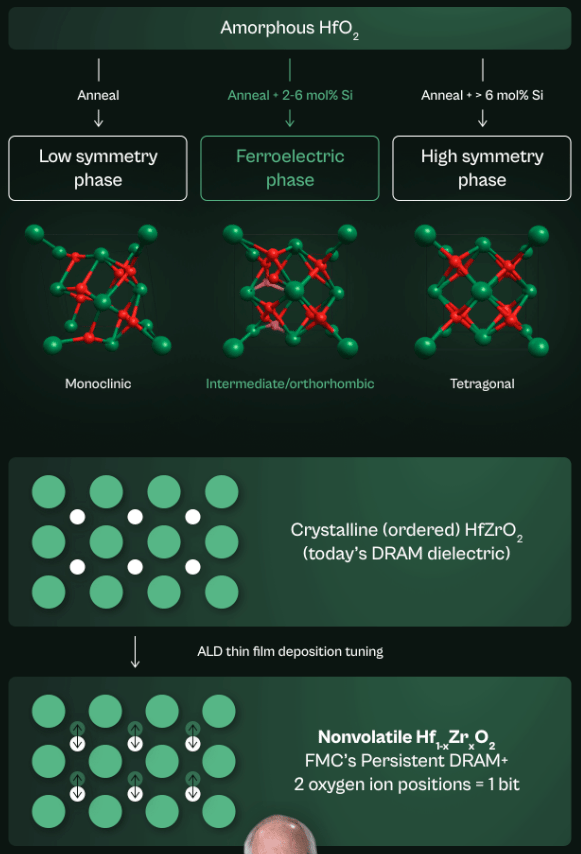

FMC incorporates ferroelectric hafnium oxide into the memory-cell structure to introduce persistence into DRAM-class and SRAM-class memory while remaining compatible with standard semiconductor production lines.

What FMC is building

DRAM+

DRAM+ is a DRAM-class technology that adds non-volatility, allowing data to remain stored during power down. This reduces the need for frequent reload cycles and lowers data movement between fast volatile memory and slower persistent storage. DRAM+ is intended for high-performance databases, AI training and inference clusters, edge deployments, automotive systems, and industrial environments.

3D CACHE+

3D CACHE+ is an SRAM-class cache architecture that combines higher density, lower standby power, and persistence. According to FMC, it reaches ten times the density of conventional SRAM and reduces standby power by a factor of ten while retaining data without power. It is designed for next-generation chiplet architectures and targets high-performance AI inference systems that depend on fast, high-capacity on-package cache.

This places FMC’s approach inside the compute node, where persistence reduces data movement between fast memory and storage.

Majestic Labs: Memory Innovation at the System Level

Another long-standing trade-off is the imbalance between compute-rich and memory-poor accelerator systems. GPU servers offer significant FLOPS (floating-point operations per second) but relatively limited on-board memory. As a result, organizations often scale out across multiple racks simply to obtain the memory capacity needed for large models, not to add more compute.
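The scale-out effect described above can be sketched with a few lines of Python. Every number here is hypothetical and chosen only to illustrate the imbalance; none comes from Majestic Labs or a GPU vendor.

```python
import math


def gpus_needed_for_memory(footprint_gb, gpu_memory_gb):
    """Smallest number of GPUs whose combined memory holds the footprint."""
    return math.ceil(footprint_gb / gpu_memory_gb)


def gpus_needed_for_compute(required_pflops, gpu_pflops):
    """Smallest number of GPUs that meets the compute target."""
    return math.ceil(required_pflops / gpu_pflops)


# Hypothetical workload and hardware figures (assumptions for illustration).
FOOTPRINT_GB = 20_000   # model weights plus in-memory state
GPU_MEMORY_GB = 192     # assumed per-GPU memory
REQUIRED_PFLOPS = 20    # assumed compute target for the workload
GPU_PFLOPS = 2          # assumed per-GPU throughput

by_memory = gpus_needed_for_memory(FOOTPRINT_GB, GPU_MEMORY_GB)
by_compute = gpus_needed_for_compute(REQUIRED_PFLOPS, GPU_PFLOPS)

print(f"GPUs needed for memory:  {by_memory}")
print(f"GPUs needed for compute: {by_compute}")
print(f"GPUs bought only for their memory: {max(0, by_memory - by_compute)}")
```

Under these assumptions the workload needs 105 GPUs to fit in memory but only 10 to meet its compute target, so most of the hardware is purchased for capacity alone.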

Majestic Labs works on reducing this imbalance by using custom accelerator and memory interface chips to decouple memory from compute and provide far more memory capacity within a single server.

What Majestic Labs is building

Majestic Labs’ servers use custom accelerator and memory interface chips to disaggregate memory and connect compute to large external memory pools. Each server supports up to 128 TB of high-bandwidth memory, almost one hundred times more than a typical GPU server and roughly equivalent to the capacity and bandwidth of about ten racks.

Majestic Labs states that this architecture offers up to one thousand times the memory capacity of a top-tier GPU and can run workloads that keep large contexts or model states in memory, such as large context windows, mixture-of-experts models, agentic systems and graph neural networks. The system runs natively with common AI frameworks and remains fully programmable beyond transformer-based models.
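As a quick sanity check, the capacity claims can be compared against each other. The sketch below only divides the quoted 128 TB by the quoted ratios; the decimal unit conversion is our assumption, and the resulting baselines are implied values, not figures published by Majestic Labs.

```python
GB_PER_TB = 1000  # decimal units, an assumption about how the figures are quoted

server_memory_gb = 128 * GB_PER_TB  # "up to 128 TB" per server, from the text


def implied_baseline_gb(total_gb, ratio):
    """Baseline capacity implied by a 'ratio times more' claim."""
    return total_gb / ratio


# "up to one thousand times the memory capacity of a top-tier GPU"
per_gpu_gb = implied_baseline_gb(server_memory_gb, 1000)

# "almost one hundred times more than a typical GPU server"
per_server_gb = implied_baseline_gb(server_memory_gb, 100)

print(f"Implied top-tier GPU memory:  {per_gpu_gb:.0f} GB")
print(f"Implied GPU server memory:    {per_server_gb:.0f} GB")
print(f"Implied GPUs per such server: {per_server_gb / per_gpu_gb:.0f}")
```

The two ratios are consistent with each other if the baseline GPU holds on the order of 128 GB and a baseline server hosts roughly ten of them, which is close to the eight-GPU servers common today.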

This places Majestic Labs’ approach at the system level, where disaggregation increases total memory per server and reduces scale-out overhead.

Unfortunately, since the website of Majestic Labs is still in stealth mode, we could not find visuals explaining the technical details :-(

Sources: FMC 1, FMC 2, FMC 3, Tech Funding News, Business Wire (2025)

Spotlights

“IQM Quantum Computers, a global leader in superconducting quantum computers, today announced the launch of its new product line called IQM Halocene. The new product line is based on open and modular on-premises quantum computers designed for quantum error correction research, a leap towards the next generation of quantum computers.

The first release of IQM Halocene will be a 150-qubit quantum computer with advanced error correction functionality. The system will enable users to advance their error correction capabilities, error correction research and to create intellectual property with logical qubits. In addition, the Halocene product line will allow execution of Noisy Intermediate Scale (NISQ) algorithms and the development of error mitigation techniques.”

⚡️ SpiNNcloud Delivers Brain‑Inspired Supercomputer to THOR: A Landmark Neuromorphic Commons Initiative in the U.S. (ACCESS Newswire)

“SpiNNcloud Systems today announced that its cutting-edge supercomputing platform, built on the SpiNNaker2 architecture, has been adopted by the Neuromorphic Commons (THOR), marking a major milestone in the advancement of brain-inspired high-performance computing (HPC) and artificial intelligence (AI).

The SpiNNaker2-based system now ranks among the top four largest neuromorphic computing platforms globally, simulating over 393 million neurons. Designed by Professor Steve Furber, the original architect of ARM, SpiNNaker2 leverages a massively parallel array of low-power processors to deliver scalable, energy-efficient performance for both AI and neuroscience workloads.”

If you’d like to learn more about what SpiNNcloud is building, check out our interview!

Headlines

Last week’s headlines featured new semiconductor developments, major quantum advances, progress in photonics and neuromorphic tech, big data center and cloud investments, and fresh shifts in AI.

🦾 Semiconductors

Baidu answers China’s call for home-grown silicon with custom AI accelerators (The Register)

SK keyfoundry accelerates development of SiC-based compound power semiconductor technology (SK keyfoundry)

Tachyum unveils 2 nm Prodigy with 21× higher AI rack performance than the Nvidia Rubin Ultra (Business Wire)

Tsavorite Scalable Intelligence sets new frontier for AI efficiency and scale with the Omni Processing Unit (Tsavorite Scalable Intelligence)

⚛️ Quantum

China’s new photonic quantum chip promises 1000-fold gains for complex computing tasks (The Quantum Insider)

Evidence of exotic superconductivity found in twisted graphene opening new paths for quantum devices (The Quantum Insider)

SECQAI Tapes Out CHERI TPM with Post-Quantum Cryptography for Secure Computation (The Quantum Insider)

Q-CTRL connects Fire Opal with RIKEN’s IBM Quantum System Two for hybrid quantum-classical computing (The Quantum Insider)

IBM reveals new quantum processors, software and algorithm advances (The Quantum Insider)

Haiqu demonstrates improved anomaly detection and pattern recognition with IBM quantum computer (The Quantum Insider)

⚡️ Photonic / Optical

Ravi Pradip’s CoreDAQ drops the barrier to entry for silicon photonics experimentation (Hackster.io)

New photonic system performs tensor maths at speed of light for next-gen AI processing (Interesting Engineering)

🧠 Neuromorphic

DorsaVi Shares Jump 18% on Acquisition of Neuromorphic Technology (Stocks Down Under)

💥 Data Centers

Anthropic announces $50 billion data center plan (TechCrunch)

Google announces €5.5 billion investment in Germany, including AI infrastructure, through 2029 (Google Cloud)

☁️ Cloud

Google opens sovereign cloud hub in Germany (SDxCentral)

📡 Networking

Megaport to acquire Latitude.sh, creating an industry-leading compute and network-as-a-service platform (Business Wire)

🤖 AI

Ex-OpenAI exec Mira Murati’s Thinking Machines Lab nears $50B valuation (Tech Funding News)

Readings

This reading list covers HBM and NAND scaling, quantum simulation, photonic innovation, neuromorphic hardware for science and health, data center fragility, and AI funding dynamics.

🦾 Semiconductors

HBM leads the way to defect-free bumps (SemiEngineering) (18 mins)

New rules put the squeeze on semiconductor gray market (SemiEngineering) (21 mins)

Unlocking z-pitch scaling for next-generation 3D NAND flash (imec) (18 mins)

Photolithography Materials Market Growth: Continuing Growth of EUV and Advanced Node Demand (Techcet) (4 mins)

Memory Industry to Maintain Cautious CapEx in 2026, with Limited Impact on Bit Supply Growth (TrendForce) (7 mins)

⚛️ Quantum

Universal Quantum Simulation of 50 Qubits on Europe’s First Exascale Supercomputer (arXiv) (34 mins)

Rigetti Computing Reports Third Quarter 2025 Financial Results; Provides Technology Roadmap Updates for 2026 and 2027 (Rigetti Computing) (25 mins)

⚡️ Photonic / Optical

Photonics start-ups powering Europe’s innovation (Optics.org) (11 mins)

Gyromorphs should block light in all directions (Optica OPN) (7 mins)

Enhanced optical bistability in slotted photonic crystal structure for microwave frequency generation (Nature) (43 mins)

🧠 Neuromorphic

Solving sparse finite element problems on neuromorphic hardware (Nature) (64 mins)

A neuromorphic approach to early arrhythmia detection (Nature) (54 mins)

Ferroelectric Neuromorphic Memory: A Step Toward Bio-Inspired Computing (Bioengineer.org) (15 mins)

Neuromorphic hardware tackles sparse finite element challenges (Bioengineer.org) (12 mins)

💥 Data Centers

Data centers at risk: the fragile core of American power (Foreign Policy Research Institute) (17 mins)

Are data centers the new oil fields? (TechCrunch) (32 mins – Podcast)

🤖 AI

The circular money problem at the heart of AI’s biggest deals (TechCrunch) (26 mins – Video)

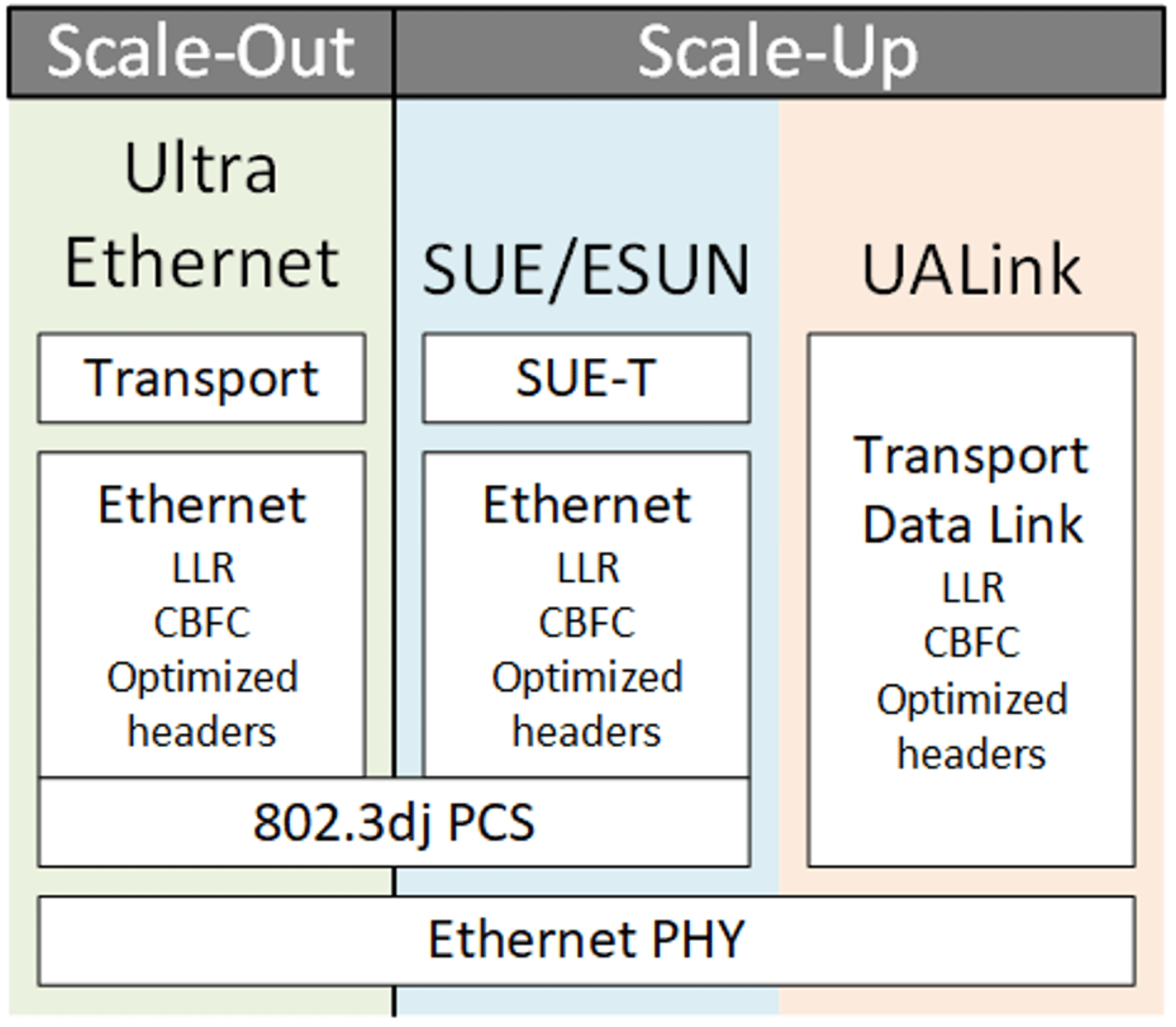

Multiple AI scale-up options emerge (SemiEngineering) (23 mins)

Funding News

Last week brought an unusually high number of rounds, led by significant activity in semiconductors and data centers. Semiconductors accounted for the majority of deals across all stages, including two major Series C raises. Data centers contributed the largest individual financing, while quantum and networking remained relatively small in comparison.

| Amount | Name | Round | Category |
|---|---|---|---|
| €161K | | | Quantum |
| €5M | | | Semiconductors |
| $6M | | | Semiconductors |
| $11M | | | Networking |
| $40M | | | Semiconductors |
| $50M | | | Data Centers |
| $50M | | | Semiconductors |
| $100M | | | Data Centers |
| €100M | | | Semiconductors |
| $275M | | | Semiconductors |
| $327M | | | Data Centers |

Bonus: Inside Washington’s Updated Semiconductor Strategy

Two major policy analyses from last week look at how Washington aims to protect and rebuild its semiconductor leadership.

CSIS: Gaps in the Proposed U.S. Chip Security Act

CSIS identifies a set of weaknesses in the proposed chip-level security mechanisms for advanced GPUs.

Key points

Location verification cannot be trusted if trusted execution environments are compromised, which leaves a core enforcement mechanism unsupported.

Telemetry and location signals can be spoofed, making it possible for diverted chips to appear compliant even when they are not.

Chips deployed in firewalled, intermittently connected or air-gapped data centers cannot perform the required check-ins, which undermines any post-shipment monitoring.

The verification model depends on a global network of trusted servers that does not exist today, leaving the mechanism without the infrastructure it requires to function.

Missing or irregular verification signals cannot be interpreted reliably, since non-response is common in real data center environments and cannot be used to identify diversion.

The Architecture of AI Leadership: Enforcement, Innovation, and Global Trust (Center for Strategic and International Studies)

Council on Foreign Relations: Recommendations to Advance the U.S. Chip Industry

The Council on Foreign Relations offers a set of policy recommendations to strengthen the U.S. semiconductor supply chain.

Key points

Onshore the manufacturing of critical semiconductor inputs and components, including chemicals, PCBs and IC substrates.

Secure critical minerals by expanding the National Defense Stockpile, accelerating permitting and advancing recovery and substitution technologies with partners and allies.

Strengthen the workforce by supporting machinists, electricians and other trades essential for semiconductor manufacturing.

Establish an Economic Security Center at the Department of Commerce to improve coordination, technical expertise and public-private collaboration.

Use Department of Defense procurement to accelerate development of a utility-scale quantum computer.

U.S. Economic Security: Winning the Race for Tomorrow’s Technologies (Council on Foreign Relations)